Chatbots and virtual assistants are becoming increasingly common in the modern business landscape. Organizations rely on customer-facing bots to provide high-level client support, as well as internal virtual assistants to answer employee questions and streamline operations. This puts chatbots front and center in your strategy: these pieces of software are often the first entities your users will engage with, so they need to be up to the job.

With the right chatbot on your side, you will be able to meet — and even surpass — the expectations of these users, both customers and employees alike. But what does this “right” chatbot look like? What can a virtual assistant do, and what should it do?

Harnessing Natural Language Processing

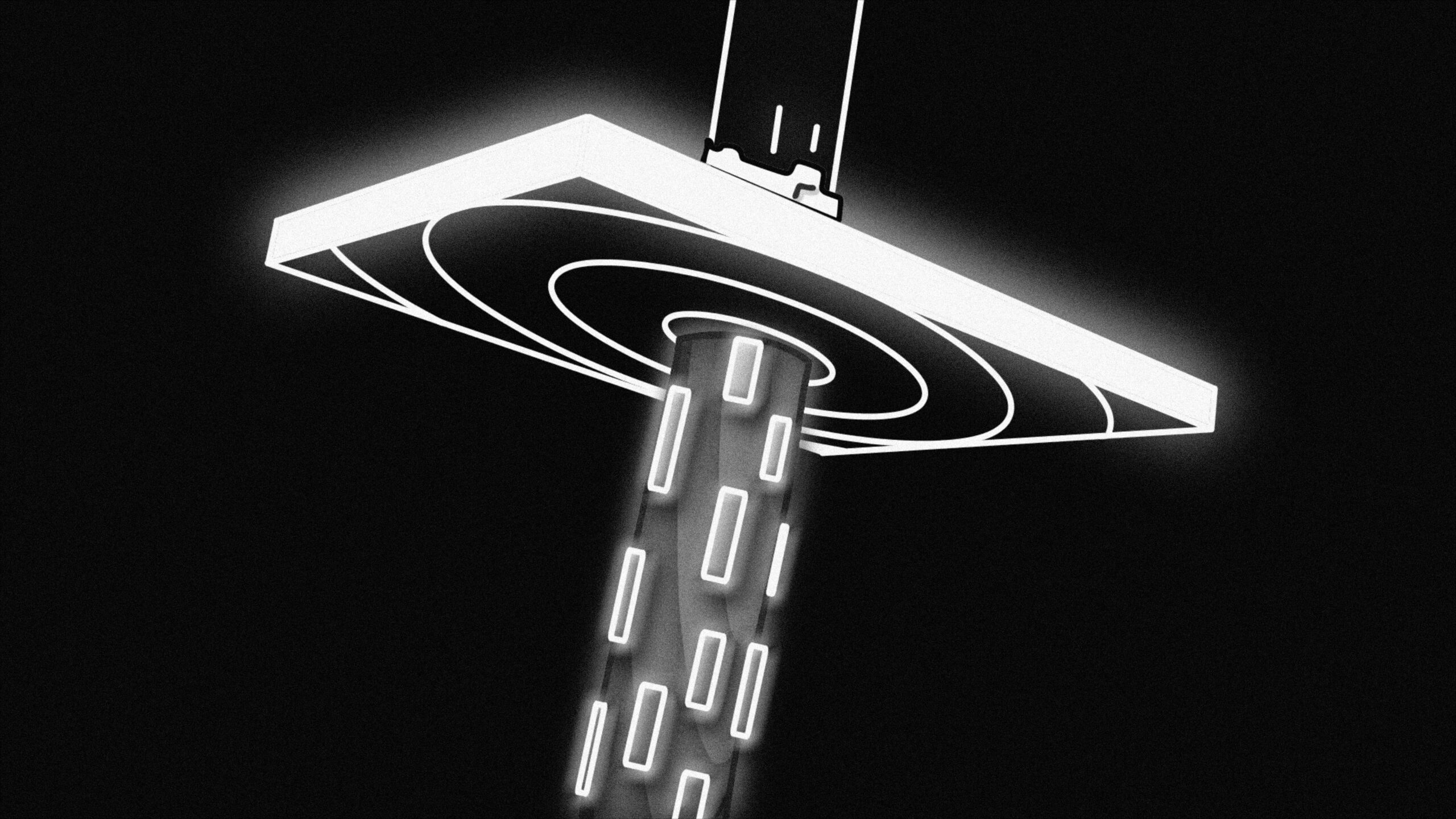

Natural language processing, or NLP, is helping to elevate chatbot technology, and AI technology in general, to a new level of effectiveness. In a basic sense, NLP lets machines interpret and produce human language, so they can interact and converse with human users and operators in an organic manner. This requires two key capabilities: understanding and organizing a human speech or text input, and then responding with a machine-generated speech or text output.

This is critical to the success of your chatbot. One of the traditional frustrations associated with virtual assistant technology has been a disconnect in understanding: users have often received the wrong response to their query, or had to repeat the query numerous times to be understood. Another frustration relates to a lack of empathy and engagement. It is difficult to feel engaged or satisfied by a business when you are met with prosaic and artificial-sounding answers.

Modern NLP technology utilizes grammatical tagging (also known as part-of-speech tagging) to recognize individual parts of speech, as well as contextualization to find the meanings of words with multiple definitions. This is supported by techniques such as named entity recognition (NER), which picks out key sources of meaning, such as location data or the names of specific products.

All of this is used to cognitively process the meaning of an input before an empathetic and organic-sounding response is delivered.
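
To make the idea of named entity recognition more concrete, here is a minimal Python sketch. It uses a simple lookup table rather than a trained model, so it only illustrates the goal of the technique, not how a modern NLP system works internally. The `GAZETTEER` contents, the product names, and the `find_entities` function are all hypothetical and chosen purely for illustration.

```python
import re

# Hypothetical lookup tables (assumption: a real system would use a trained model).
GAZETTEER = {
    "LOCATION": ["new york", "london", "berlin"],
    "PRODUCT": ["videopro editor", "cloudsync"],
}


def find_entities(text):
    """Return (entity_text, label, start) tuples found in text, ordered by position."""
    found = []
    lowered = text.lower()
    for label, names in GAZETTEER.items():
        for name in names:
            # \b stops a name from matching inside a longer word.
            pattern = r"\b" + re.escape(name) + r"\b"
            for match in re.finditer(pattern, lowered):
                original = text[match.start():match.end()]
                found.append((original, label, match.start()))
    return sorted(found, key=lambda item: item[2])
```

Calling `find_entities("My CloudSync app fails in London")` picks out `CloudSync` as a product and `London` as a location. A fixed list like this cannot recognize names it has never seen, which is one reason real chatbots tend to rely on learned models instead.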

Replacing Rule-Based Models with Cognitive Technology

Chatbots and virtual assistants have been using rudimentary AI for quite some time, achieving a degree of understanding and carrying out basic interactions. In 2022, however, your solutions need to go well beyond this basic level: rule-based AI is no longer sufficient and needs to be replaced with more cognitive technology.

Rule-based AI refers to artificial intelligence that is based on a set of pre-programmed rules. The AI is static and relies upon a framework that a human operator has built into the code. For example, if criterion A is met, then response B is delivered. The model can be more complex than this, but this simple example gives a good idea of how rule-based AI works.
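
The "if A, then B" pattern can be sketched in a few lines of Python. This is only an illustrative toy: the trigger phrases, the canned responses, and the `rule_based_reply` function are hypothetical and not taken from any particular chatbot product.

```python
# Hypothetical trigger phrases and canned responses, written ahead of time by a human.
RULES = {
    "reset password": "You can reset your password from the account settings page.",
    "opening hours": "Our support team is available 9am to 5pm, Monday to Friday.",
}
FALLBACK = "Sorry, I did not understand that."


def rule_based_reply(message):
    """Return the canned response for the first trigger phrase found in message."""
    lowered = message.lower()
    for trigger, response in RULES.items():
        if trigger in lowered:
            return response
    # No rule matched, so the bot has nothing useful to say.
    return FALLBACK
```

A message such as "How do I reset password?" hits the first rule, while "I forgot my login details" asks for much the same thing but falls through to the fallback, because nobody wrote a rule for that wording.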

The problem with rule-based AI is that it is inflexible and unsuitable for more complex queries. This is because your human programmers will need to input all of the trigger phrases and prepared responses ahead of time, so there is no room for variation or nuance. This is fine if you are dealing with just a few user inputs, which can be answered with a pre-prepared paragraph of text, but it is going to cause frustration and irritation for users in search of more personalized support. Your chatbot needs to utilize cognitive technology, actively understanding the problem from the customer’s point of view and delivering the proper responses based on this.

Learning from Interactions

A major disadvantage of the rule-based model is that the chatbot cannot grow its understanding over time. As it is simply delivering prepared responses from a centralized database, it is not learning about the needs of customers. Modern chatbot technology can draw data from its interactions with users, honing the quality of its responses as a result.

For example, let’s say your customer needs support with video editing software and uses the word “clip.” In this context, clip might refer to a particular snippet of video, or the customer might mean the process of trimming or “clipping” the video down to size. This may cause semantic confusion for the chatbot, but the software can use context to understand the customer’s intention. From here, the software retains the data relating to this interaction and uses it to achieve a swifter and more complete understanding in future interactions. This results in a self-sustaining chatbot that becomes more effective over the duration of its deployment.
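
As a rough illustration of the "clip" example, the sketch below keeps a count of the words that have appeared alongside each meaning and uses those counts to guess the meaning of a new message. It is a deliberately simple word-overlap approach, assumed here for illustration only; the `SenseLearner` class and the sense labels `trim_action` and `video_snippet` are hypothetical, and a real system would likely use a far richer model.

```python
from collections import Counter, defaultdict


class SenseLearner:
    """Toy disambiguator that improves as it sees more confirmed interactions."""

    def __init__(self):
        # Maps each sense label to a count of context words seen with that sense.
        self.context_counts = defaultdict(Counter)

    def learn(self, message, sense):
        """Record the words of a message whose intended sense has been confirmed."""
        self.context_counts[sense].update(message.lower().split())

    def guess(self, message):
        """Return the sense whose past context overlaps most, or None if unknown."""
        words = message.lower().split()
        scores = {
            sense: sum(counts[word] for word in words)
            for sense, counts in self.context_counts.items()
        }
        if not scores or max(scores.values()) == 0:
            return None
        return max(scores, key=scores.get)
```

Before any interactions are recorded, `guess` returns `None`. After the learner has been shown "please clip the video shorter" as a trimming request and "export this clip as a file" as a reference to a snippet, a new message like "clip my video to make it shorter" is scored as a trimming request.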

Undergoing Structured Training

Doing away with a rule-based model of AI does not mean there is no programmer input whatsoever. You can accelerate the learning process — and make this process more effective — by working with your chatbot, coaching it and training it as it grows its understanding. As virtual assistants can now replicate the cognitive processes of human operators, you can apply similar training processes to your chatbots in order to make them more effective.

Just like a human operator, your chatbot is still self-sustaining — it will still grow its experience and its knowledge with every live interaction. However, structured training ensures that the chatbot has the data required to meet the needs of customers and other users in specific situations. There may also be highly specialized interactions that your chatbot needs to be aware of ahead of time. With training input, you can make sure that the bot is able to answer these queries and navigate these interactions with a high degree of success. Ongoing monitoring and assessment will also help you to identify where additional training needs to be delivered.
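
One simple way to picture the monitoring step is to count which topics the chatbot most often fails to resolve. The sketch below assumes a hypothetical interaction log in which each entry records a `topic` and whether the query was `resolved`; the log format, the `training_gaps` function, and the `min_failures` threshold are all illustrative assumptions.

```python
from collections import Counter


def training_gaps(interaction_log, min_failures=2):
    """Return topics with at least min_failures unresolved queries, most frequent first."""
    failures = Counter(
        entry["topic"] for entry in interaction_log if not entry["resolved"]
    )
    return [topic for topic, count in failures.most_common() if count >= min_failures]
```

Topics returned by a function like this are candidates for additional structured training, since they mark the situations where the chatbot's existing knowledge is falling short.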